Azure Storage Accounts with bicep

This blog is a basic Azure Bicep tutorial that has been split into several parts so that it does not become too long. The previous article covers the toolchain, the basics of Azure and the Bicep CLI, and how to deploy a custom template. In this tutorial, we dive deeper into the language and write our first code sample in Bicep to create and configure Azure Blob Storage accounts. In addition to the storage account example, we explain the concept of modules and how they work. Further blogs on creating Functions, IoT Hub and Cosmos DB will follow.

Infrastructure we want to describe

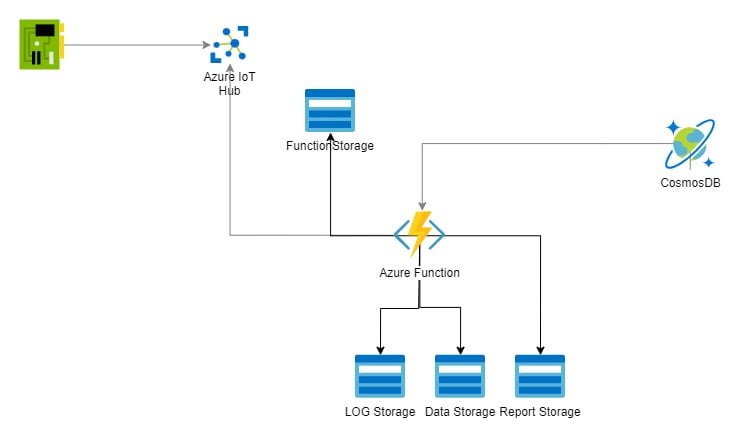

We want to build a small environment consisting of four different resource types, namely Blob Storage, Functions, IoT Hub and Cosmos DB, and we want to use different features of those resources.

For Blob Storage, we want to use queues and blob containers. For Functions, we go for the Y1 (Dynamic) tier for automatic scaling and create the required storage account and an Application Insights resource in the process. For IoT Hub, we chose the free F1 tier, which comes with some restrictions but at least allows us to create one custom endpoint. For Cosmos DB, we choose autoscale with RUs shared across containers. If some or all of these descriptions don't mean anything to you, never mind. We chose the most cost-efficient variants of the resources, so deploying them won't cost much, and I hope this is sufficient to give you an insight into how Bicep works and to demonstrate my workflow in a comprehensible way.

Bicep definition of Azure Storage Account

The following chapters contain a detailed tutorial for Azure Bicep with code examples and best practices. To start somewhere, we want to build a storage resource: a plain StorageV2 account with no special capabilities. We start from the reference definition of the storage account resource. Luckily, Microsoft provides such a reference for almost every resource type, and it looks like this:

// The resource keyword means that we are declaring a resource, Azure bicep allows up to 800 resources for a single template

// symbolicname is a placeholder we can use to access this resource throughout our deployment

// Microsoft.Storage/storageAccounts is the type of the resource we want to deploy

// @2021-04-01 is the API version of the resource

resource symbolicname 'Microsoft.Storage/storageAccounts@2021-04-01' = {

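// the name must be globally unique, 3 to 24 characters long and contain only lowercase letters and numbers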

name: 'string'

location: 'string'

tags: {

tagName1: 'tagValue1'

tagName2: 'tagValue2'

}

sku: {

name: 'string'

}

kind: 'string'

extendedLocation: {

name: 'string'

type: 'EdgeZone'

}

identity: {

type: 'string'

userAssignedIdentities: {}

}

properties: {

accessTier: 'string'

allowBlobPublicAccess: bool

allowCrossTenantReplication: bool

allowSharedKeyAccess: bool

azureFilesIdentityBasedAuthentication: {

activeDirectoryProperties: {

azureStorageSid: 'string'

domainGuid: 'string'

domainName: 'string'

domainSid: 'string'

forestName: 'string'

netBiosDomainName: 'string'

}

defaultSharePermission: 'string'

directoryServiceOptions: 'string'

}

customDomain: {

name: 'string'

useSubDomainName: bool

}

encryption: {

identity: {

userAssignedIdentity: 'string'

}

keySource: 'string'

keyvaultproperties: {

keyname: 'string'

keyvaulturi: 'string'

keyversion: 'string'

}

requireInfrastructureEncryption: bool

services: {

blob: {

enabled: bool

keyType: 'string'

}

file: {

enabled: bool

keyType: 'string'

}

queue: {

enabled: bool

keyType: 'string'

}

table: {

enabled: bool

keyType: 'string'

}

}

}

isHnsEnabled: bool

isNfsV3Enabled: bool

keyPolicy: {

keyExpirationPeriodInDays: int

}

largeFileSharesState: 'string'

minimumTlsVersion: 'string'

networkAcls: {

bypass: 'string'

defaultAction: 'string'

ipRules: [

{

action: 'Allow'

value: 'string'

}

]

resourceAccessRules: [

{

resourceId: 'string'

tenantId: 'string'

}

]

virtualNetworkRules: [

{

action: 'Allow'

id: 'string'

state: 'string'

}

]

}

routingPreference: {

publishInternetEndpoints: bool

publishMicrosoftEndpoints: bool

routingChoice: 'string'

}

sasPolicy: {

expirationAction: 'Log'

sasExpirationPeriod: 'string'

}

supportsHttpsTrafficOnly: bool

}

}

source https://learn.microsoft.com/en-us/azure/templates/microsoft.storage/2021-04-01/storageaccounts/blobservices?pivots=deployment-language-bicep

Bicep code sample of a plain Azure storage account by Microsoft [source: learn.microsoft.com]

That's a lot of properties. Luckily, we decided to create a basic storage account and don't need most of those settings, so we can leave many of them at their default values. If an optional property isn't present in the resource definition, its default value is used, so the next step is to remove all optional properties we don't use.

After doing this, we end up with this more compact resource definition:

resource storageAccount 'Microsoft.Storage/storageAccounts@2022-09-01' = {

name: storageName

location: location

sku: {

name: skuType

}

kind: storageKind

properties: {

accessTier: 'Hot'

minimumTlsVersion: 'TLS1_2'

}

}

Code example with default values

Note that we could have removed even more properties and would still have gotten a valid deployment, but we chose to set minimumTlsVersion and accessTier explicitly. As a rule of thumb, it is a good idea to define a resource as strictly as possible, because default values might change, which could lead to unwanted behaviour after deployment.

Using Parameters

Now we are almost ready to deploy our storage account. We could just enter fixed values for all the remaining keys and deploy our template, but this would require a code change whenever we want to deploy a slightly different version of our storage resource. What we need is a way to set properties per deployment, and this is where parameters come in handy. If we wanted to, let's say, change the name of the storage account, we could add the following parameter:

param storageName string

This gives us the possibility to set the value before the deployment. Sometimes, however, we don't want to do so and are happy with a predefined value. For this case, a parameter can be given a default value:

param storageName string = 'storage${uniqueString(resourceGroup().id, deployment().name)}'

Advantage of unique name

With this small addition, the deployment gives us a unique name that we can still change if we want to. It additionally shows three handy features of Bicep:

Parameters can have a default value; it simply needs to be assigned when declaring the parameter.

As shown in this example, functions and variables can be inserted into strings using the ${expression} syntax (string interpolation).

The uniqueness comes from the uniqueString function, called with resourceGroup().id and deployment().name as arguments. It returns a 13-character hash of its arguments. The result is deterministic rather than random: the same resource group and deployment name always produce the same string, while a different resource group or deployment name produces a different one, which makes name collisions unlikely.

Our parameter, called storageName, may or may not be descriptive enough. To help users understand the required values before deployment, we can use annotations (called decorators in Bicep). Additionally, storage account names have strict length requirements: only 3 to 24 characters are allowed, and users should be aware of this during deployment.

We can use decorators for both of these issues:

@maxLength(24)

@minLength(3)

@description('Name of the storage account: 3 to 24 alphanumeric characters')

param storageName string = 'storage${uniqueString(resourceGroup().id, deployment().name)}'
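The default above stays within the limit because 'storage' (7 characters) plus the 13-character hash is 20 characters. If the prefix gets longer, the default value itself can exceed 24 characters and the deployment fails validation. A minimal sketch of one way to guard against this, assuming a hypothetical namePrefix parameter in storages.bicep, is to trim the generated name with the built-in take() and toLower() functions:

```bicep
// Hypothetical longer prefix, only used to illustrate the length guard
param namePrefix string = 'm2spherelogging'

// toLower() keeps the name valid (storage account names must be lowercase),
// take() cuts the interpolated string down to at most 24 characters
@maxLength(24)
@minLength(3)
@description('Name of the storage account: 3 to 24 lowercase letters and numbers')
param storageName string = take(toLower('${namePrefix}${uniqueString(resourceGroup().id, deployment().name)}'), 24)
```

Keep in mind that take() cuts from the end, so a long prefix removes part of the unique hash and makes collisions more likely; a shorter prefix is usually the better choice.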

For a storage name, it is fairly clear what to insert. Other parameters, like the SKU type, might not be self-explanatory. For storage accounts, the SKU mostly defines the redundancy settings. We discussed this at length and concluded that only LRS (locally redundant storage: replication within a single datacenter) and ZRS (zone-redundant storage: replication across the availability zones of one region, e.g. within West Europe) are viable options for us. We can enforce this with another decorator called @allowed.

@description('Type of the Stock Keeping Unit, for storages those are mostly redundancy settings')

@allowed([

'Standard_LRS'

'Standard_ZRS'

])

param skuType string = 'Standard_LRS'

The @allowed decorator enforces that the value of the parameter is one of those defined.

So far, we have used four decorators (@maxLength, @minLength, @description and @allowed), and we will use some more in later examples.

With this we completed the definition of our storage account and are ready for a deployment.

@maxLength(24)

@minLength(3)

@description('Name of the storage account: 3 to 24 alphanumeric characters')

param storageName string = 'storage${uniqueString(resourceGroup().id, deployment().name)}'

@description('Type of the Stock Keeping Unit, for storages those are mostly redundancy settings')

@allowed([

'Standard_LRS'

'Standard_ZRS'

])

param skuType string = 'Standard_LRS'

@description('Location for the storage resource')

param location string = resourceGroup().location

@description('Type of the storage account')

@allowed([

'StorageV2'

])

param storageKind string = 'StorageV2'

resource storageAccount 'Microsoft.Storage/storageAccounts@2022-09-01' = {

name: storageName

location: location

sku: {

name: skuType

}

kind: storageKind

properties: {

accessTier: 'Hot'

minimumTlsVersion: 'TLS1_2'

}

}

Bicep code sample for complete definition of an Azure storage account
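Because every parameter has a default value, this template can be deployed as is. To override individual values without touching the template, the values can be kept in a separate parameter file. A minimal sketch, assuming a recent Bicep version with .bicepparam support and a hypothetical file name storages.bicepparam next to storages.bicep:

```bicep
// storages.bicepparam (hypothetical file name)
// The using statement ties this parameter file to the template it belongs to
using './storages.bicep'

// Only values that should differ from the defaults need to be listed
param skuType = 'Standard_ZRS'
param location = 'westeurope'
```

With a recent Azure CLI, such a file can be passed along with the template, for example az deployment group create --resource-group <your-resource-group> --template-file storages.bicep --parameters storages.bicepparam. Since skuType is restricted by @allowed, a value such as 'Standard_GRS' in this file would be rejected before anything is deployed.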

Bicep modules for deployment of an Azure storage Account

Unfortunately, this doesn’t help us much. First, the Storage Accounts would still require lots of manual configuration, like storage containers or queues. Additionally, we would need to make three deployments to reach the environment we sketched at the beginning. So, we’ll start with a new Bicep file and a few new concepts.

First, we create a new file called main.bicep. This file is the entry point for the whole deployment. It won't contain every resource definition itself, because we will reuse what we have already built, but it is the file we will use for building and deploying our environment.

Azure Bicep supports modules, which we will use in this tutorial. A module lets one Bicep file reference and deploy another Bicep file. We will do this with our storages.bicep file, so that we don't waste the work we put into creating it. Additionally, this gives us the opportunity to explore a new concept of the language.

Bicep modules can be defined like this:

// Storage Deployment

module storagesModule './storages.bicep' = {

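// storageAccounts is the variable defined below; Bicep does not require symbols to be declared before use

name: 'StorageDeployment${storageAccounts[0].name}'

params: {

storageName: storageAccounts[0].name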

location: location

}

}

Bicep code sample of an Azure storage account with the module keyword

Module keyword

In this tutorial, we use the module keyword to reference a different Azure Bicep file as a module by giving it a symbolic name (storagesModule in our case) and adding the file path as a string. A module also needs a name, which becomes the name of its nested deployment; we build it with string interpolation. Finally, we define the parameters we want to pass. In storages.bicep, all parameters have default values, so we wouldn't need to provide any parameters for it to work. However, we want to set storageName and location, so we need to define variables or parameters for those.

// Parameters for deployment

param namePrefix string = 'm2sphere'

param location string = resourceGroup().location

param env string = 'dev'

// Storage Variables

var storageAccounts = [

{

name: '${namePrefix}logstorage${env}'

}

]

Bicep code sample for the Azure storage account name and location

Instead of a parameter for the storage name, we chose a name prefix parameter and an environment parameter. Again, it would be good to annotate those accordingly, but we skipped that to save some lines and increase readability. The location parameter uses the built-in resourceGroup() function to retrieve the resource group's location. Apart from that, nothing is new with the parameters, but we are using a variable of a new type: storageAccounts is an array that contains one entry with only a name. We will extend this shortly.

Loops in Azure bicep

But first, let's take a quick look at another new concept in this Azure Bicep tutorial: loops. Bicep can loop over a range of integers or over the elements of a collection using for loops. We now have an array for our storage accounts, so let's use this functionality.

// Storage Deployment

module storagesModule './storages.bicep' = [for storageAccount in storageAccounts: {

name: 'StorageDeployment${storageAccount.name}'

params: {

storageName: storageAccount.name

location: location

}

}]

Bicep sample code to loop over Azure storage account modules

As we can see, the syntax is rather simple, [for element in collection: { ... }], but it gives us a big advantage: we can now create multiple storage accounts just by adding them to the array. We will do exactly this in a bit, but we have to take a minor detour first.
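Besides iterating over an array, a loop can also run over an integer range or expose the index of each element. A short sketch of both forms; the variable names queueNames and deploymentNames are hypothetical and only serve as illustration in main.bicep:

```bicep
// range(startIndex, count): range(0, 3) yields the integers 0, 1 and 2
var queueNames = [for i in range(0, 3): 'queue${i}']
// queueNames evaluates to [ 'queue0', 'queue1', 'queue2' ]

// (element, index) syntax: the index is available next to the element,
// e.g. to build distinct deployment names
var deploymentNames = [for (storageAccount, i) in storageAccounts: 'StorageDeployment${i}-${storageAccount.name}']

// Note: looped resources and modules are deployed in parallel by default;
// the @batchSize(1) decorator on a loop would deploy them one after another
```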

Adding Queues and Containers to our Storage Account

What we can deploy so far is a bare storage account, but we want queues for our functions and containers to store blobs in. To get this additional functionality, we have to make some adjustments to our storages.bicep file.

First we need a blob service and a queue service.

resource blobService 'Microsoft.Storage/storageAccounts/blobServices@2023-01-01' = {

parent: storageAccount

name: 'default'

}

resource queueService 'Microsoft.Storage/storageAccounts/queueServices@2023-01-01' = {

parent: storageAccount

name: 'default'

}

We simply add those after our storage account definition. They are separate resources, so we need to specify that they belong to the storage account we just defined. To do this, we set the parent property to storageAccount, the symbolic name we chose for the storage account resource. This ensures that the queue service and the blob service are deployed only after the storage account, and that they are created inside it.
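The parent property is not the only way to express this relationship. Bicep also allows declaring child resources inside the parent resource, in which case the parent is implied and the short child type inherits the parent's API version. A sketch of this alternative for storages.bicep, assuming the parameters defined earlier in the file; it is shown for comparison only and is not used in the rest of this tutorial:

```bicep
resource storageAccount 'Microsoft.Storage/storageAccounts@2022-09-01' = {
  name: storageName
  location: location
  sku: {
    name: skuType
  }
  kind: storageKind
  properties: {
    accessTier: 'Hot'
    minimumTlsVersion: 'TLS1_2'
  }

  // Nested child resources: no parent property needed,
  // the type 'blobServices' is resolved relative to the parent
  resource blobService 'blobServices' = {
    name: 'default'
  }

  resource queueService 'queueServices' = {
    name: 'default'
  }
}

// Nested resources are referenced from outside with the :: accessor
output blobServiceId string = storageAccount::blobService.id
```

The parent property keeps each resource at the top level of the file, which tends to read better once the file grows; nesting keeps closely related resources together.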

Creating the queues resource

Next, we want to create the storage queues themselves. Since we will very likely have more than one, we define an array parameter called queues and iterate over it using a for loop.

param queues array

resource storageQueues 'Microsoft.Storage/storageAccounts/queueServices/queues@2023-01-01' = [for queue in queues: {

name: queue.name

parent: queueService

}]

Code example creating a queues resource

We use a parameter here because we want to be able to set it in our main.bicep file. As with the queue service before, we connect the queues to the queueService using the parent property.
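As declared above, queues is a required parameter, so every caller has to pass it. A possible design alternative, sketched here as a hypothetical variant of the parameters in storages.bicep, is an empty array as default value: a for loop over an empty array simply deploys no resources, so storage accounts without queues or containers need no extra values.

```bicep
// Hypothetical variant: empty defaults make both parameters optional
@description('Queues to create in the storage account; each entry needs a name')
param queues array = []

@description('Blob containers to create; each entry needs a name and publicAccess')
param containers array = []

// Unchanged loop: with queues = [] no queue resources are created
resource storageQueues 'Microsoft.Storage/storageAccounts/queueServices/queues@2023-01-01' = [for queue in queues: {
  name: queue.name
  parent: queueService // the queue service declared earlier in storages.bicep
}]
```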

Creating the container resource

For our storage containers, we do something similar: we define an array parameter called containers and use a for loop to create the containers.

param containers array

resource storageContainers 'Microsoft.Storage/storageAccounts/blobServices/containers@2023-01-01' = [for container in containers: {

name: container.name

parent: blobService

properties: {

publicAccess: container.publicAccess

}

}]

Code example creating the container resources

The only difference here is that we set a specific property called publicAccess. This means every object in our containers array must provide this property.
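With a plain array parameter, Bicep cannot check whether each entry actually has a name and a publicAccess property; a missing property only shows up as an error during deployment. Recent Bicep versions support user-defined types, which let the compiler check the shape of each entry. A sketch under that assumption, with the hypothetical type names containerConfig and queueConfig:

```bicep
// Shape of a single blob container entry;
// publicAccess is restricted to the values the container resource accepts
type containerConfig = {
  name: string
  publicAccess: 'None' | 'Blob' | 'Container'
}

// Shape of a single queue entry
type queueConfig = {
  name: string
}

// Typed arrays instead of the untyped 'array' parameters
param containers containerConfig[]
param queues queueConfig[]
```

With these declarations, a missing publicAccess property or a typo such as 'none' is reported when main.bicep is built, not when the deployment runs.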

Now, everything for our storages template is prepared. We only need to add the new required parameters to our main.bicep file.

The storageAccounts array already exists. Every storage account must now include a queues and containers array. We simply extend the currently existing variable with two arrays.

var storageAccounts = [

{

name: '${namePrefix}logstorage${env}'

containers: [

{

name: 'error'

publicAccess: 'None'

}

{

name: 'information'

publicAccess: 'None'

}

{

name: 'verbose'

publicAccess: 'None'

}

{

name: 'access'

publicAccess: 'None'

}

]

queues: [

{

name: 'processlogs'

}

]

}

]

Code sample extended by two arrays

Datatypes

Bicep supports the data types string, int, bool, array and object. Sensitive strings and objects are declared as string or object parameters with the @secure() decorator; they correspond to the secureString and secureObject types of ARM JSON templates.

When we created our storageAccounts array at the beginning with only the name property, we could also have used a plain array of strings like var storageAccounts = [ '${namePrefix}logstorage${env}' ]. Knowing that we would need additional properties, however, we went for the slightly more extensive object notation.

An object can have properties defined as key value pairs. The values of those properties can be of any type and it is possible to mix types.
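To make the data types more tangible, here is a short sketch with one parameter per type. All names and values are hypothetical and not part of our environment:

```bicep
param replicaCount int = 3
param enableLogging bool = true
param environmentName string = 'dev'

// Multi-line arrays need no commas between the elements
param allowedIps array = [
  '10.0.0.1'
  '10.0.0.2'
]

// Object values may mix types, including nested arrays and objects
param settings object = {
  retentionDays: 7
  enabled: true
  owner: 'platform-team'
  containers: [
    'error'
    'information'
  ]
}

// A sensitive value: a plain string marked with @secure(),
// so it is not logged or shown in the deployment history
@secure()
param connectionString string
```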

We now use this to define two arrays of objects inside each storage account object.

For the queues, this is again just an array of objects with a name property; for the containers, we additionally added the publicAccess property that we used in the resource definition in storages.bicep. Unfortunately, we still have some errors: storages.bicep now defines the queues and containers parameters as required, so we have to add them to the module definition.

// Storage Deployment

module storagesModule './storages.bicep' = [for storageAccount in storageAccounts: {

name: 'StorageDeployment${storageAccount.name}'

params: {

storageName: storageAccount.name

location: location

containers: storageAccount.containers

queues: storageAccount.queues

}

}]

Extension of the module definition to include queues and containers

As shown here, values of object properties can be retrieved using dot notation. We added storageAccount.containers for the containers parameter and storageAccount.queues for the queues parameter, thereby passing the two arrays to storages.bicep.
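Data flows into a module through params; to get values back out, a module can declare outputs. A minimal sketch, assuming two hypothetical outputs that are not part of the tutorial code: storages.bicep exposes the account name and blob endpoint, and main.bicep collects them. Because storagesModule is now a looped module, it behaves like an array and its outputs are read by index.

```bicep
// --- storages.bicep: values the module hands back to its caller ---
output storageAccountName string = storageAccount.name
output blobEndpoint string = storageAccount.properties.primaryEndpoints.blob

// --- main.bicep: one entry per deployed storage account ---
output blobEndpoints array = [for (storageAccount, i) in storageAccounts: {
  name: storagesModule[i].outputs.storageAccountName
  blobEndpoint: storagesModule[i].outputs.blobEndpoint
}]
```

Outputs like these become useful once other modules, such as the upcoming functions, need to know which storage accounts exist.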

Azure Storage Account with bicep on the basis of the introductory example

At the beginning, we showed the environment we want to build. To get a step closer to our goal, we now add the missing storage accounts, with the exception of the functions storage, which will follow once we write the infrastructure code for the functions.

We can simply do this by adding them to the storageAccounts array:

// Storage Variables

var storageAccounts = [

{

name: '${namePrefix}logstorage${env}'

containers: [

{

name: 'error'

publicAccess: 'None'

}

{

name: 'information'

publicAccess: 'None'

}

{

name: 'verbose'

publicAccess: 'None'

}

{

name: 'access'

publicAccess: 'None'

}

]

queues: [

{

name: 'processlogs'

}

]

}

{

name: '${namePrefix}datastorage${env}'

containers: [

{

name: 'health'

publicAccess: 'None'

}

{

name: 'status'

publicAccess: 'None'

}

{

name: 'system'

publicAccess: 'None'

}

]

queues: [

{

name: 'processhealthdata'

}

]

}

{

name: '${namePrefix}reportstorage${env}'

containers: [

{

name: 'daily'

publicAccess: 'None'

}

{

name: 'usergenerated'

publicAccess: 'None'

}

{

name: 'publicreports'

publicAccess: 'Blob'

}

]

queues: [

{

name: 'generatereport'

}

]

}

]

Full code example for the creation of Azure storage accounts with bicep
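One caveat concerns the publicreports container with publicAccess 'Blob'. Anonymous access on a container only works if the storage account itself allows it via the allowBlobPublicAccess property from the reference template. Depending on the API version and on subscription or policy defaults, new storage accounts may have this disabled, in which case deploying that container fails. A sketch of how this could be made explicit, assuming a hypothetical allowBlobPublicAccess parameter in storages.bicep and a Bicep version that supports the safe-dereference (.?) and coalesce (??) operators:

```bicep
// --- storages.bicep: hypothetical parameter, off unless a caller opts in ---
@description('Allow containers in this account to be configured for anonymous read access')
param allowBlobPublicAccess bool = false

resource storageAccount 'Microsoft.Storage/storageAccounts@2022-09-01' = {
  name: storageName
  location: location
  sku: {
    name: skuType
  }
  kind: storageKind
  properties: {
    accessTier: 'Hot'
    minimumTlsVersion: 'TLS1_2'
    // Set explicitly instead of relying on a default that may differ between API versions
    allowBlobPublicAccess: allowBlobPublicAccess
  }
}

// --- main.bicep: only the report storage entry would get allowBlobPublicAccess: true ---
module storagesModule './storages.bicep' = [for storageAccount in storageAccounts: {
  name: 'StorageDeployment${storageAccount.name}'
  params: {
    storageName: storageAccount.name
    location: location
    containers: storageAccount.containers
    queues: storageAccount.queues
    // .? yields null if the entry has no such property, ?? then falls back to false
    allowBlobPublicAccess: storageAccount.?allowBlobPublicAccess ?? false
  }
}]
```

Keeping the flag off by default and enabling it only for the one account that needs public reports limits anonymous access to where it is intended.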

This small change is sufficient for deploying all storage accounts we need for our sample environment. We are done with our storage accounts and will move on to preparing the code for the deployment of our Azure Functions.

>> Work in progress/coming soon Azure bicep basics: functions

If you don’t want to miss any of our blogs in the future, simply subscribe to our newsletter. Of course, we are also happy to receive comments or ratings. This helps us understand whether our articles are helpful and relevant.